Cam-ou-flage

Hey Everyone,

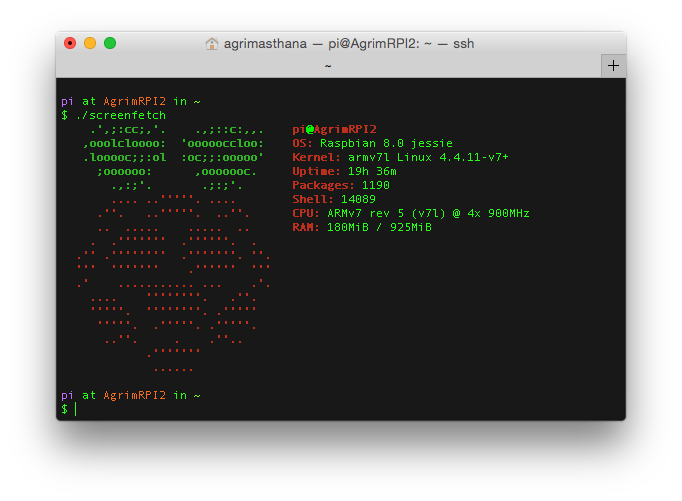

Sorry for the lack of updates. I have been working on something so awesome it should technically be three blog posts instead of one. It was such an intense project that I ended up bricking one of my Raspberry Pis by corrupting its memory card and causing segmentation faults, and that whole fiasco is also what slowed down my progress. Anyway, to start off this new year, I wanted to shift my focus to upcoming and bleeding-edge technologies like OpenCV.

The overall idea is to find the most dominant color in a given frame, so that if something were to stay camouflaged, it would have the best chance with the chosen color. To implement this, I used K-means clustering to group the image's pixels into two clusters and determine which color occupied the most space. The result gets more precise as we increase K (the number of clusters), but so does the computation time, so for the sake of speed I chose to use only 2 clusters. Here is what the algorithm looks like:

- Capture video using the RPi camera

- Stream the video in a supported format (MJPEG)

- Load the video into OpenCV

- Process every frame as a NumPy array

- Reduce the size of the image for easier computation

- Use K-means clustering to create a histogram with K sections

- Determine the largest section in the histogram

- Render the color on the 8×8 LED grid
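To make the clustering steps above concrete, here is a minimal NumPy-only sketch: plain Lloyd's k-means over a frame's pixels, then the K-section histogram and its largest section. It is not the code from this project (that is in the screenshot further down); the function names, the farthest-point initialization and the iteration cap are my own assumptions. `sklearn.cluster.KMeans(n_clusters=2)` would do the same job through its `labels_` and `cluster_centers_` attributes.

```python
import numpy as np


def _sq_dists(pixels: np.ndarray, centers: np.ndarray) -> np.ndarray:
    """(N, k) matrix of squared distances from every pixel to every center."""
    return ((pixels[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)


def kmeans(pixels, k: int = 2, iters: int = 20, seed: int = 0):
    """Plain Lloyd's k-means on an (N, 3) array of colors.

    Returns (centers, labels): centers is (k, 3), labels is (N,) cluster ids.
    """
    pixels = np.asarray(pixels, dtype=np.float64)
    rng = np.random.default_rng(seed)
    # Farthest-point initialization: start from one random pixel, then keep
    # adding the pixel that is farthest from every center chosen so far.
    centers = pixels[[rng.integers(len(pixels))]]
    while len(centers) < k:
        farthest = _sq_dists(pixels, centers).min(axis=1).argmax()
        centers = np.vstack([centers, pixels[farthest]])
    for _ in range(iters):
        # Assignment step: each pixel joins its nearest center.
        labels = _sq_dists(pixels, centers).argmin(axis=1)
        # Update step: move each center to the mean of its pixels
        # (an empty cluster keeps its previous center).
        new_centers = np.array([
            pixels[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    labels = _sq_dists(pixels, centers).argmin(axis=1)
    return centers, labels


def dominant_color(frame, k: int = 2):
    """Cluster an (H, W, 3) RGB frame and return (rgb, histogram).

    histogram[j] is the fraction of pixels in cluster j (the "K sections");
    rgb is the center of the largest section, rounded to integers.
    """
    pixels = np.asarray(frame).reshape(-1, 3)
    centers, labels = kmeans(pixels, k)
    hist = np.bincount(labels, minlength=k) / len(labels)
    best = int(hist.argmax())
    rgb = tuple(int(round(float(c))) for c in centers[best])
    return rgb, hist
```

For a test frame that is 70% pure red and 30% pure blue, `dominant_color(frame)` returns `(255, 0, 0)` with a histogram holding 0.7 and 0.3 (the cluster order depends on the random starting pixel).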

The solution architecture is as follows:

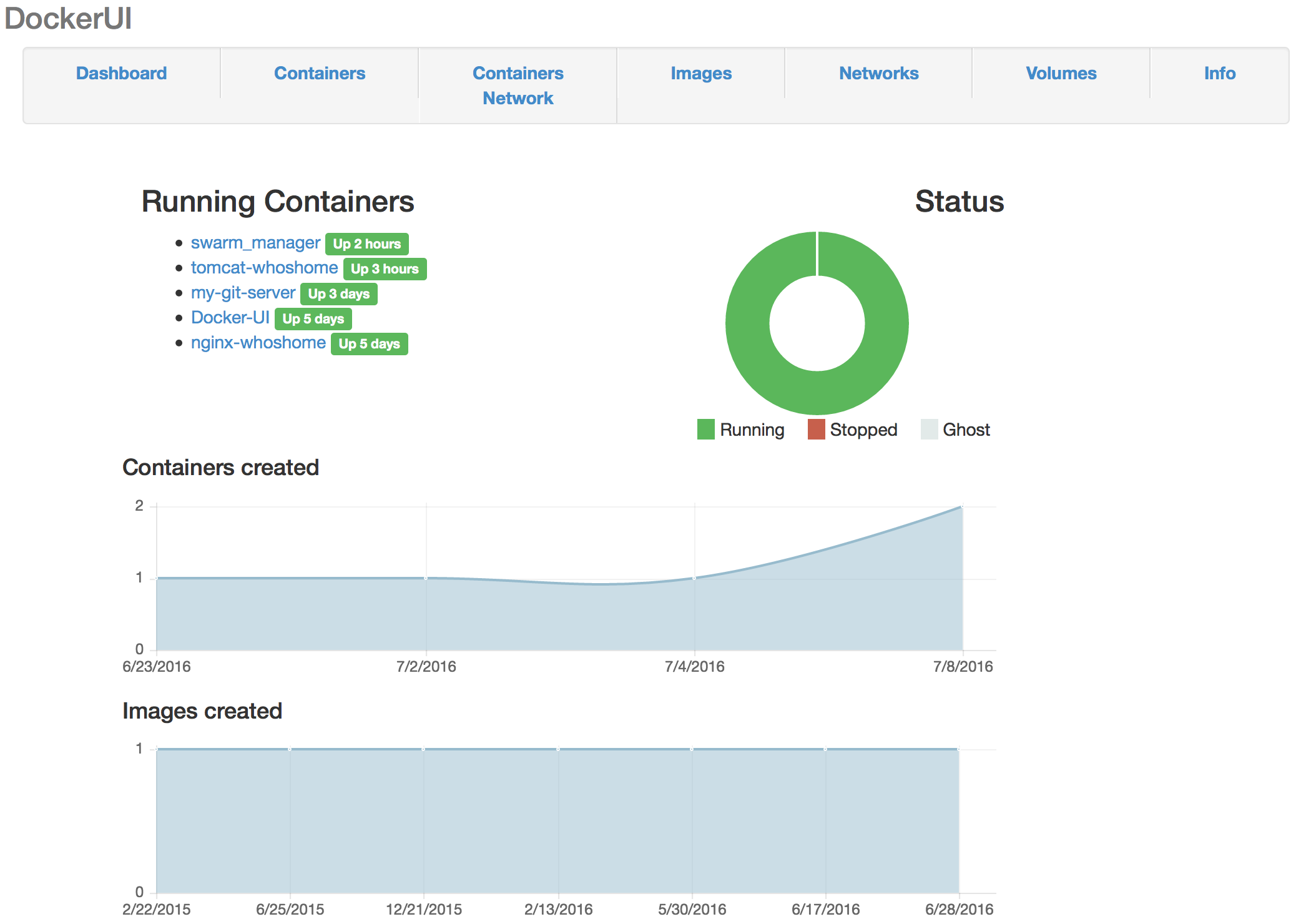

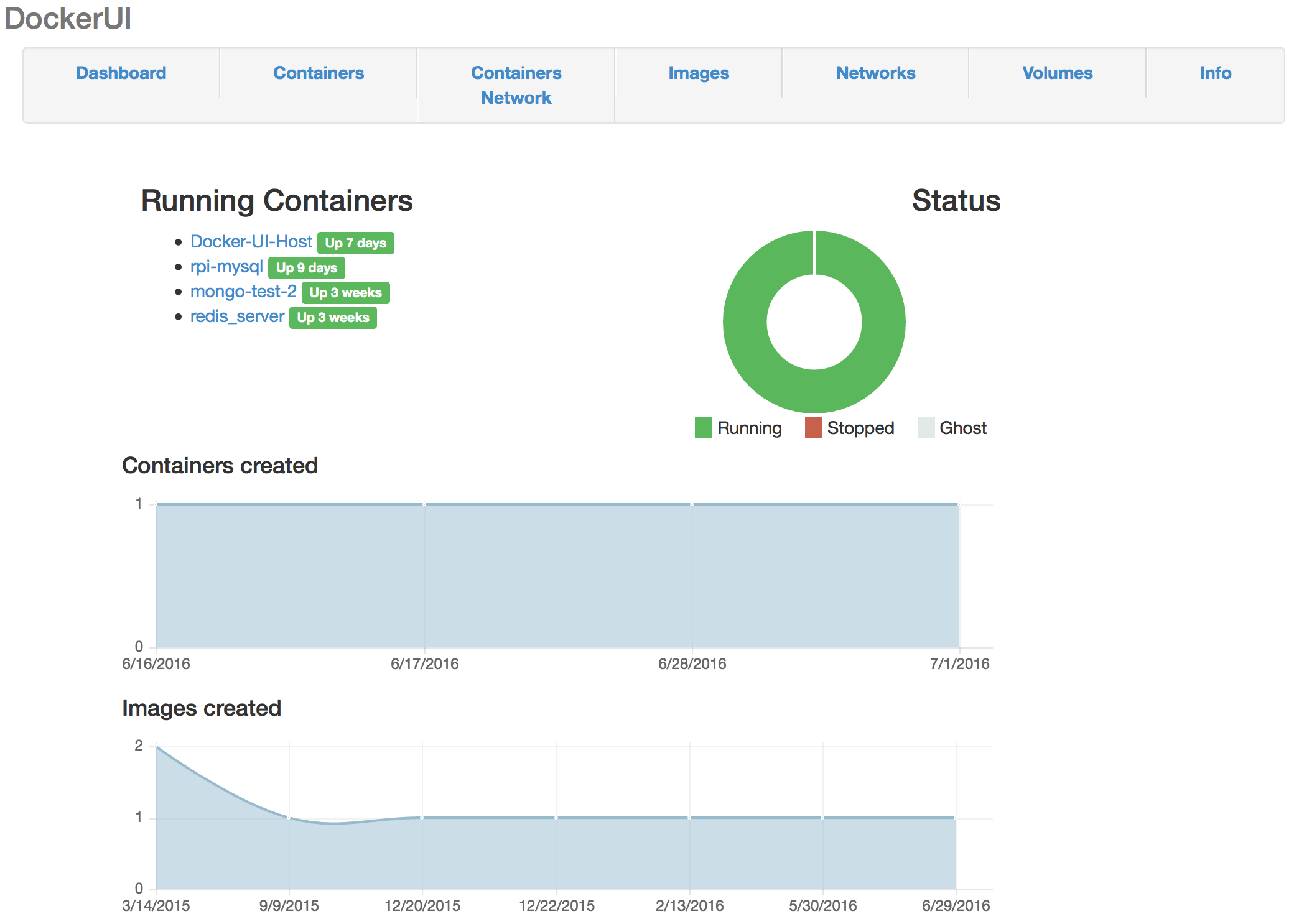

At first, I tried to do everything using only my two Raspberry Pis, but the problem I faced was that compiling took 14 hours, and the performance was incredibly poor. So I thought it was best to delegate the computer vision work to a container in the cloud, which was very easy to set up and configure. There are 3 main components in the system:

- MJPEG streamer

- AWS EC2 instance running OpenCV

- REST API for the SenseHat (by yours truly)
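The SenseHat API itself isn't shown in the post, so its route and payload below are hypothetical. This is a minimal standard-library sketch, assuming the API accepts a JSON POST of `{"r", "g", "b"}` at `/color`, of how the EC2 side could push the dominant color to the Pi.

```python
import json
import urllib.request
from typing import Sequence


def build_color_request(api_base: str, rgb: Sequence[float]) -> urllib.request.Request:
    """Build a JSON POST for a hypothetical SenseHat endpoint at <api_base>/color."""
    # Clamp each channel to the 0-255 range the LED matrix accepts.
    r, g, b = (max(0, min(255, int(v))) for v in rgb)
    body = json.dumps({"r": r, "g": g, "b": b}).encode("utf-8")
    return urllib.request.Request(
        api_base.rstrip("/") + "/color",  # hypothetical route
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_color(api_base: str, rgb: Sequence[float], timeout: float = 2.0) -> int:
    """Send the color and return the HTTP status code."""
    with urllib.request.urlopen(build_color_request(api_base, rgb), timeout=timeout) as resp:
        return resp.status
```

On the Pi, the handler behind that route could pass the tuple to the `sense_hat` library's `SenseHat().clear((r, g, b))`, which fills the whole 8×8 grid with a single color.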

Check it out in action.

After installing the MJPEG streaming module on my Pi 2, I wrote a simple wrapper shell script for it.
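The wrapper script isn't included in the post. As a rough Python stand-in (hypothetical, not the original script), the launcher below builds the command line, assuming the streamer is mjpg-streamer with its `input_raspicam.so` input plugin (a USB webcam would use `input_uvc.so`) and its `output_http.so` output plugin, where `-p` sets the port and `-w` the folder of web files. The `./www` path assumes you run it from the build directory.

```python
import shlex
import subprocess
from typing import List


def mjpg_streamer_cmd(port: int = 8080, www: str = "./www",
                      input_plugin: str = "input_raspicam.so") -> List[str]:
    """Build the argv for mjpg_streamer: one input plugin, one HTTP output plugin."""
    # Each plugin and its own options travel as a single argument, exactly as
    # they would inside quotes on a shell command line.
    output_plugin = f"output_http.so -p {port} -w {www}"
    return ["mjpg_streamer", "-i", input_plugin, "-o", output_plugin]


def stream_url(host: str, port: int = 8080) -> str:
    """URL where output_http serves the live MJPEG stream."""
    return f"http://{host}:{port}/?action=stream"


def start_stream(**kwargs) -> subprocess.Popen:
    """Launch mjpg_streamer as a child process and return its handle."""
    cmd = mjpg_streamer_cmd(**kwargs)
    print("Starting:", shlex.join(cmd))
    return subprocess.Popen(cmd)
```

Calling `start_stream().wait()` on the Pi keeps the streamer in the foreground. Depending on how it was built, you may also need `LD_LIBRARY_PATH` to point at the directory holding the plugin `.so` files.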

Running the wrapper creates an MJPEG stream at `http://<rpi-ip>:8080/?action=stream`.

The next step was to consume this stream in AWS. I created a simple base container using the Anaconda distribution of Python, and setting up OpenCV was as easy as running `conda install opencv`. Next is the meat of the project, the code for which is shared below.

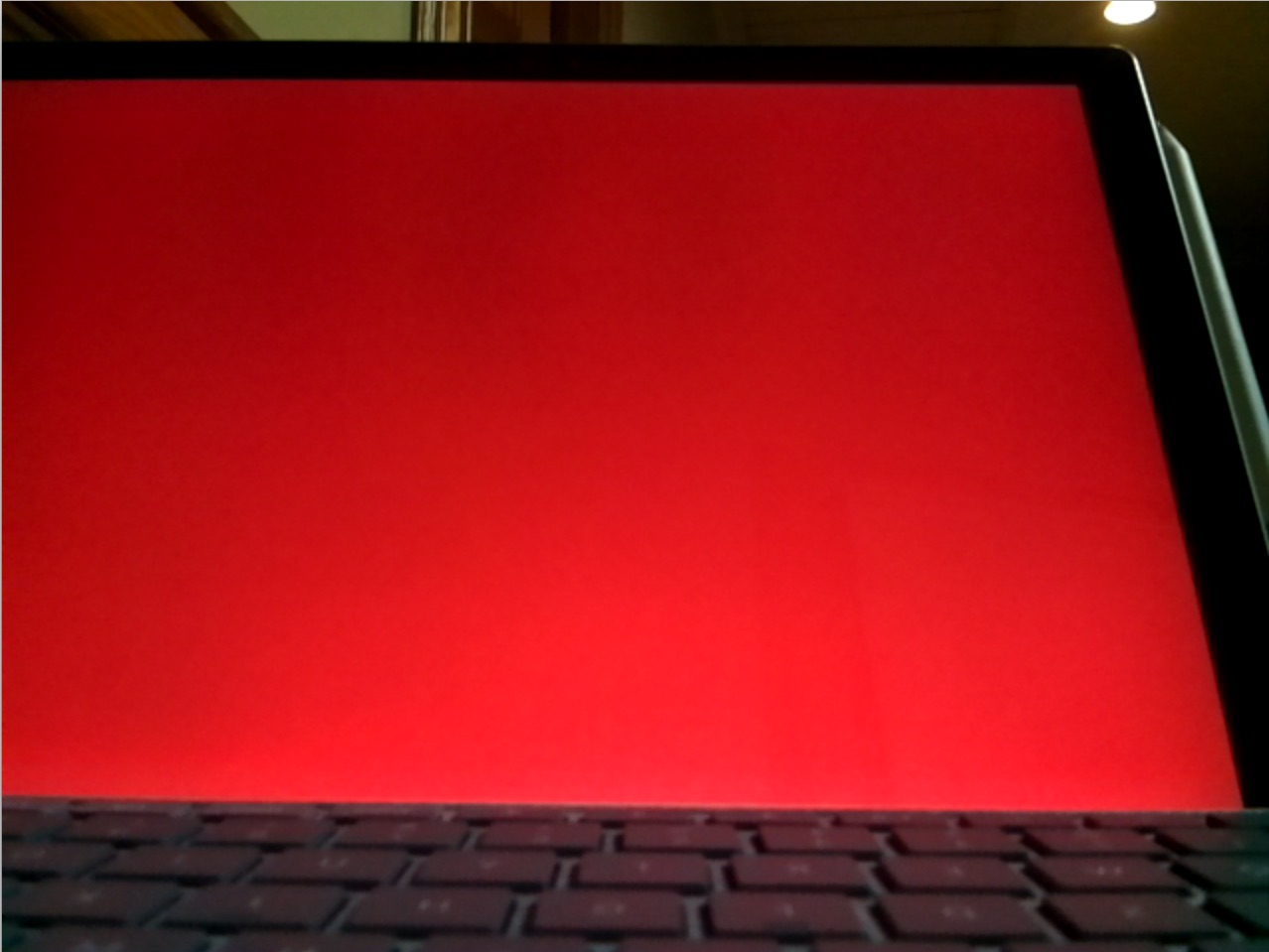
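Since the code above is only a screenshot, here is a separate, hedged sketch of the first steps on the EC2 side: opening the Pi's stream with `cv2.VideoCapture` (which can usually read an MJPEG-over-HTTP URL when OpenCV is built with FFmpeg support) and yielding each frame as a smaller NumPy array. The frame skipping and stride-based downscaling are my assumptions; `cv2.resize(frame, None, fx=0.25, fy=0.25, interpolation=cv2.INTER_AREA)` would be the smoother way to shrink frames.

```python
from typing import Iterator

import numpy as np


def open_stream(url: str):
    """Open the Pi's MJPEG stream with OpenCV.

    cv2 is imported here, inside the function, so the frame helper below can
    be used and tested without OpenCV installed.
    """
    import cv2
    cap = cv2.VideoCapture(url)
    if not cap.isOpened():
        raise RuntimeError(f"could not open stream {url}")
    return cap


def frames(capture, step: int = 4, every_nth: int = 1) -> Iterator[np.ndarray]:
    """Yield downscaled RGB frames as (H, W, 3) NumPy arrays.

    capture: anything with an OpenCV-style read() -> (ok, frame) method.
    step: keep every step-th pixel along each axis (cheap size reduction).
    every_nth: process only every n-th frame so the clustering can keep up.
    """
    index = 0
    while True:
        ok, frame = capture.read()
        if not ok:
            break  # stream ended or a frame could not be decoded
        if index % every_nth == 0:
            # OpenCV decodes frames as BGR; reversing the last axis gives RGB.
            yield np.ascontiguousarray(frame[::step, ::step, ::-1])
        index += 1
```

Each yielded frame can then be flattened with `frame.reshape(-1, 3)` and handed to the clustering step, for example by looping over `frames(open_stream(url))` with `url` set to the stream address above.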

So this is what the EC2 container sees.

And this is the histogram generated after K-means clustering.

As you can see, red seems to be the most dominant color in the frame. You can tell from the amount of time the K-means clustering takes to compute the dominant color that this project is still in its infancy. Here is where I see scope for improvement:

- Using k = 2 is fundamentally wrong; k should be the exact number of different colors in the frame

- To send the color to the LED board, I should use a pub-sub system instead of REST, since acknowledging each request is not necessary

- To achieve true camouflage, computing the color alone is not enough; I need to figure out patterns and textures as well

- The overall performance of the system must improve, either by using a distributed approach (such as MPI) or by tweaking the algorithm
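For the first point, one cheap way to pick K from the frame itself, instead of fixing it at 2, is to count distinct colors after coarse quantization. This is an illustrative heuristic, not part of the project; `bin_size`, `min_share` and `k_max` are arbitrary starting values to tune.

```python
import numpy as np


def estimate_k(pixels, bin_size: int = 32, min_share: float = 0.01, k_max: int = 8) -> int:
    """Heuristic guess at how many distinct colors an (N, 3) pixel array holds.

    Channels are quantized into bins of `bin_size` so that small noise merges
    into one color; every quantized color covering at least `min_share` of the
    pixels counts once. The result is clamped to [1, k_max].
    """
    quantized = np.asarray(pixels, dtype=np.int64) // bin_size
    _, counts = np.unique(quantized, axis=0, return_counts=True)
    distinct = int((counts / counts.sum() >= min_share).sum())
    return max(1, min(distinct, k_max))
```

The returned value could be passed to the clustering step as `k`; the `k_max` cap keeps a noisy frame from blowing up the computation time.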

Hope you guys liked my project. Look forward to more bleeding-edge projects in the year ahead!